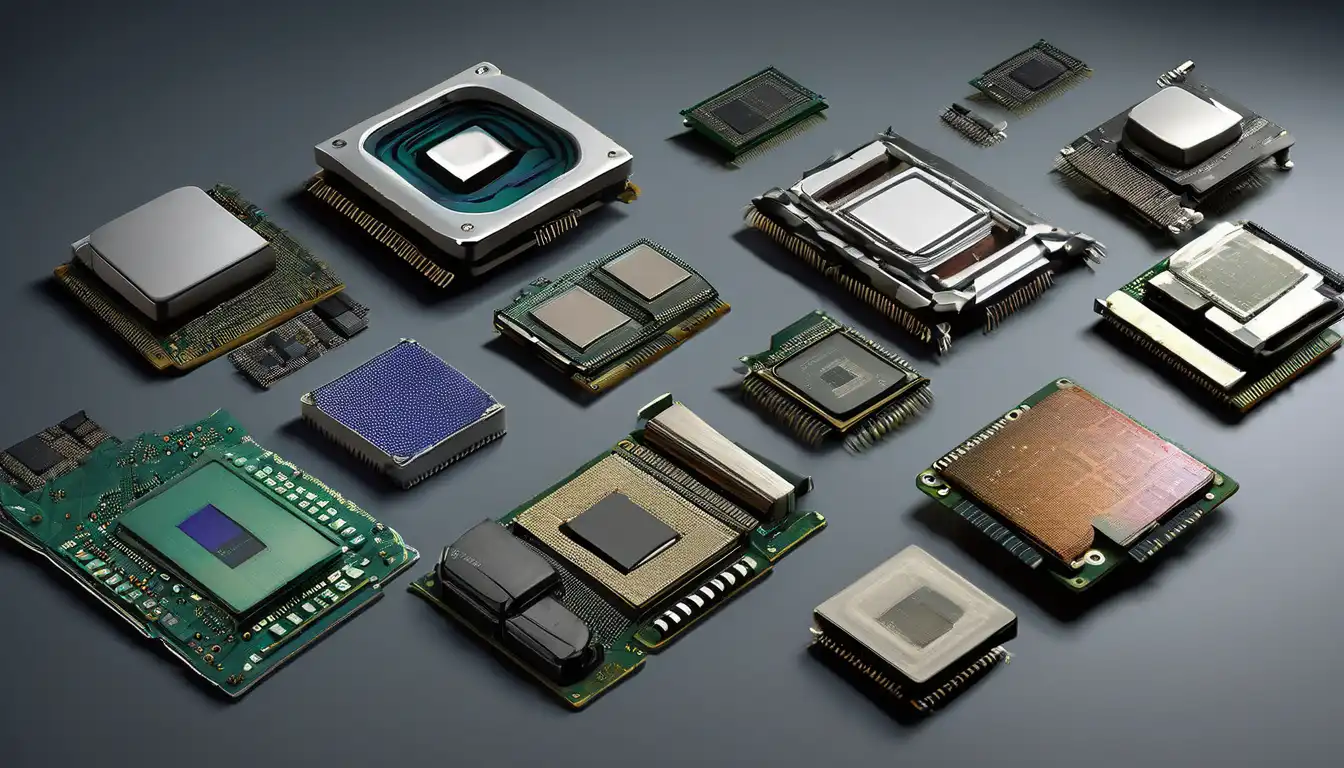

The Dawn of Computing: Early Processor Beginnings

The evolution of computer processors represents one of the most remarkable technological journeys in human history. Beginning with room-sized machines that managed a few thousand arithmetic operations per second, processors have become microscopic devices capable of billions of operations per second. This transformation didn't happen overnight. It unfolded through decades of innovation, breakthroughs, and sustained engineering effort.

In the 1940s, the first electronic computers used vacuum tubes as their primary switching components. One of the best-known examples, ENIAC (Electronic Numerical Integrator and Computer), contained roughly 17,500 vacuum tubes and weighed about 27 tonnes. Despite their enormous size, these machines operated at clock rates measured in kilohertz and required constant maintenance because tubes failed frequently.

The Transistor Revolution

The invention of the transistor in 1947 at Bell Labs marked the first major turning point in processor evolution. These semiconductor devices replaced bulky vacuum tubes, offering smaller size, lower power consumption, greater reliability, and faster switching speeds. The transition from vacuum tubes to transistors paved the way for the second generation of computers in the late 1950s and early 1960s.

Transistor-based computers were significantly more compact and efficient than their predecessors. IBM's 7000 series and DEC's PDP-1 exemplified this new era of computing, bringing computational power to universities and research institutions. However, each transistor still had to be manufactured and connected individually, limiting the complexity of circuits that could be practically built.

The Integrated Circuit Era

The integrated circuit (IC) revolutionized processor design. Jack Kilby demonstrated the first working IC at Texas Instruments in 1958, and Robert Noyce independently developed a more manufacturable silicon version at Fairchild Semiconductor in 1959. Integrated circuits allowed multiple transistors to be fabricated on a single chip, dramatically reducing size, cost, and power requirements while improving reliability.

The 1960s saw the emergence of small-scale integration (SSI) and medium-scale integration (MSI) chips, containing tens to hundreds of transistors. As integration density grew through the decade, placing an entire central processing unit on one chip became conceivable, though the first commercial microprocessor did not arrive until 1971. The foundation was being laid for the personal computing revolution that would transform society.

The First Microprocessors

The year 1971 marked a watershed moment with Intel's introduction of the 4004, the world's first commercially available microprocessor. This 4-bit processor contained 2,300 transistors and operated at 740 kHz, modest by today's standards but revolutionary at the time. The 4004 demonstrated that a complete central processing unit could be manufactured on a single chip.

Intel followed this success with the 8-bit 8008 in 1972 and the groundbreaking 8080 in 1974. The 8080 became the heart of early personal computers like the Altair 8800, sparking the hobbyist computing movement. Meanwhile, competitors like Motorola entered the market with processors such as the 6800, establishing the competitive landscape that would drive rapid innovation.

The x86 Architecture Dominance

Intel's 8086 processor, introduced in 1978, established the x86 architecture that would dominate personal computing for decades. This 16-bit processor could address up to 1 MB of memory through 20-bit physical addresses and introduced features, such as segmented memory addressing, that later x86 designs carried forward. The 8088 variant, which paired the same internal design with an 8-bit external data bus, was used in IBM's first personal computer in 1981 and cemented x86's position in the market.
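The 1 MB figure follows directly from the width of the 8086's physical addresses: 20 bits can distinguish 2^20 = 1,048,576 bytes. The chip built each address from two 16-bit values, a segment and an offset. The following is a minimal Python sketch of that arithmetic; the function and constant names are illustrative and do not come from any Intel documentation.

```python
ADDRESS_BITS = 20                   # width of an 8086 physical address
ADDRESS_SPACE = 1 << ADDRESS_BITS   # 1,048,576 bytes, i.e. 1 MB


def physical_address(segment: int, offset: int) -> int:
    """Combine a 16-bit segment and a 16-bit offset into a 20-bit address."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must each fit in 16 bits")
    # The segment is shifted left by 4 bits (multiplied by 16) and the offset
    # is added. On the 8086 the sum wraps around at the 1 MB boundary.
    return ((segment << 4) + offset) % ADDRESS_SPACE


if __name__ == "__main__":
    print(hex(physical_address(0x1234, 0x5678)))  # 0x12340 + 0x5678
    print(ADDRESS_SPACE)
```

Because many different segment and offset pairs map to the same physical address, the scheme let 16-bit registers reach a larger memory than 64 KB at the cost of some programming complexity.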

The 1980s witnessed rapid advancement in processor capabilities. Intel's 80286 (1982) introduced protected mode operation, while the 80386 (1985) brought 32-bit computing to the mainstream. Each generation offered significant performance improvements while maintaining backward compatibility—a key factor in x86's enduring success.

The Clock Speed Race

During the 1990s, processor evolution became characterized by the "megahertz war" as Intel and AMD competed to deliver ever-higher clock speeds. The Intel Pentium processor (1993) introduced superscalar architecture, capable of executing multiple instructions per clock cycle. Subsequent generations like the Pentium Pro, Pentium II, and Pentium III incorporated increasingly sophisticated features including out-of-order execution and SIMD instructions.

By early 2000, both AMD and Intel had shipped processors that reached the 1 GHz mark, but physical limitations were beginning to emerge. Power consumption and heat generation became critical challenges as clock speeds and transistor densities increased. The industry was approaching the limits of traditional scaling methods.
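The link between clock speed and heat can be illustrated with a first-order approximation often used for CMOS logic, in which switching power grows with capacitance, the square of the supply voltage, and frequency. The sketch below is a simplified model that ignores leakage current, and the scaling factors in the example are made up for illustration rather than taken from any real chip.

```python
def dynamic_power(capacitance_f: float, voltage_v: float,
                  frequency_hz: float, activity: float = 1.0) -> float:
    """First-order CMOS switching power in watts: activity * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


def relative_power(frequency_scale: float, voltage_scale: float) -> float:
    """How power changes when frequency and voltage are each scaled."""
    # Capacitance and activity are assumed unchanged, so they cancel out.
    return frequency_scale * voltage_scale ** 2


if __name__ == "__main__":
    # Hypothetical case: doubling the clock while raising voltage by 20%.
    print(f"power grows by about {relative_power(2.0, 1.2):.2f}x")
```

Under this model, higher clock speeds that also require higher voltage raise power much faster than they raise performance, which is one way to see why frequency scaling ran into thermal limits.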

The Multi-Core Revolution

The mid-2000s marked a fundamental shift in processor design philosophy. Instead of focusing solely on increasing clock speeds, manufacturers began integrating multiple processor cores on a single chip. This approach addressed the thermal and power constraints that had stalled the clock speed race while delivering substantial performance gains for multitasking and parallel workloads.

AMD's Athlon 64 X2 (2005) and Intel's Core 2 Duo (2006) demonstrated the effectiveness of multi-core architectures in consumer machines. Today, consumer processors commonly feature 4 to 16 cores, while server processors may contain 64 cores or more. This paradigm shift represents one of the most significant changes in processor design since the integrated circuit itself.
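How much extra cores help depends on how much of a workload can actually run in parallel. Amdahl's law is a commonly used upper bound on that speedup. The sketch below is a minimal Python version, assuming an idealized workload with no communication or synchronization overhead; the 90% parallel fraction is an arbitrary example value.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when only part of a task can be parallelized."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time regardless of core count; only the
    # parallel part is divided among the cores.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    for cores in (2, 4, 16, 64):
        print(cores, round(amdahl_speedup(0.9, cores), 2))
```

With 90% of the work parallelizable, the bound never exceeds 10x no matter how many cores are added, which helps explain why core counts alone do not determine real-world performance.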

Specialization and Heterogeneous Computing

Modern processor evolution has embraced specialization to meet diverse computational needs. Graphics Processing Units (GPUs) have evolved from simple display controllers to massively parallel processors capable of handling complex mathematical workloads. Meanwhile, specialized processors for artificial intelligence, cryptography, and networking have become increasingly common.

The rise of System-on-Chip (SoC) designs has integrated CPUs with GPUs, memory controllers, and other components on a single die. This approach, popularized by mobile devices, offers improved power efficiency and compact form factors. Apple's M-series processors demonstrate how SoC designs can deliver exceptional performance while maintaining energy efficiency.

Current Trends and Future Directions

Today's processor evolution focuses on several key areas beyond traditional performance metrics. Energy efficiency has become paramount for mobile and data center applications. Security features like hardware-enforced memory protection and encryption acceleration address growing cybersecurity concerns. Machine learning acceleration represents another frontier, with dedicated matrix-math hardware, such as the tensor cores in GPUs and the neural processing units in many mobile and laptop chips, becoming increasingly common.

The industry continues to push the boundaries of semiconductor manufacturing, with leading-edge processes now marketed as 3 nm-class and below. However, physical limitations are prompting exploration of materials beyond conventional silicon, including gallium nitride and silicon carbide (so far used mainly in power and radio-frequency devices rather than in logic), as well as three-dimensional chip stacking techniques.

The Quantum Computing Horizon

Looking forward, quantum processors represent the next potential revolution in computing. Unlike classical processors that use bits (each either 0 or 1), quantum processors use qubits, which can be placed in a superposition of 0 and 1 and yield one definite value only when measured. While still in early stages, quantum computing promises to solve certain problems that are intractable for classical computers, particularly in fields like cryptography, drug discovery, and optimization.
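The idea of superposition can be made concrete with a toy classical simulation of a single qubit. The sketch below represents the qubit as a pair of amplitudes and applies a Hadamard gate, a standard operation that turns a definite 0 into an equal superposition. It is only an illustration of the arithmetic, not how real quantum hardware is programmed, and the function names are chosen here for clarity.

```python
import math


def hadamard(state: tuple) -> tuple:
    """Apply a Hadamard gate to a single-qubit state (amp_0, amp_1)."""
    amp_0, amp_1 = state
    scale = 1 / math.sqrt(2)
    return (scale * (amp_0 + amp_1), scale * (amp_0 - amp_1))


def probabilities(state: tuple) -> tuple:
    """Chance of measuring 0 or 1: the squared magnitude of each amplitude."""
    amp_0, amp_1 = state
    return (abs(amp_0) ** 2, abs(amp_1) ** 2)


if __name__ == "__main__":
    zero = (1.0, 0.0)                # a qubit that is definitely 0
    superposed = hadamard(zero)      # equal mix of 0 and 1
    print(probabilities(superposed))
    # Applying the gate a second time returns the qubit to a definite 0.
    print(probabilities(hadamard(superposed)))
```

Simulating n qubits this way requires tracking 2^n amplitudes, which is one reason classical machines struggle to emulate even moderately sized quantum processors.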

Meanwhile, neuromorphic computing, inspired by the human brain, offers another promising direction. These processors mimic the behavior of biological neurons and synapses in hardware, potentially offering massive parallelism and energy efficiency for specific AI workloads. As classical computing approaches fundamental physical limits, these alternative architectures may define the next chapter of processor evolution.

The journey from vacuum tubes to modern multi-core processors demonstrates humanity's relentless pursuit of computational power. Each generation built upon the innovations of its predecessors while overcoming new challenges. As we stand on the brink of quantum and neuromorphic computing, the evolution of processors continues to shape our technological future in ways we can only begin to imagine.